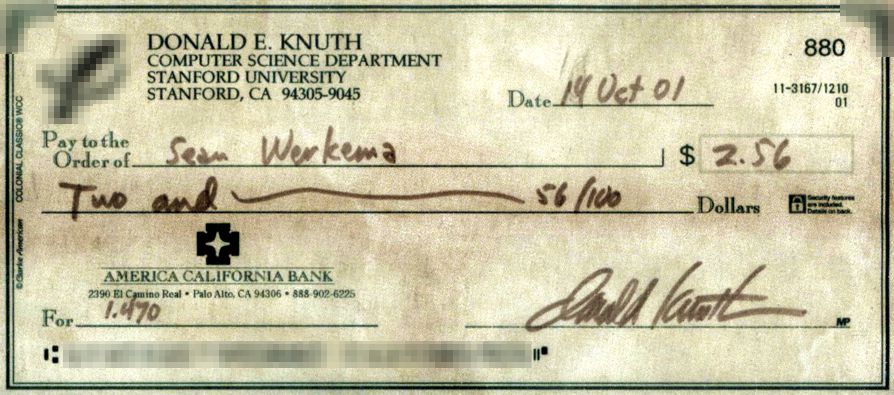

Twenty years ago today, I got a Knuth Check. Knuth dated it October 14, 2001, and I received it in the mail in early November that year, I think.

I’d heard, of course, about Knuth Checks, but I didn’t really know as much about them then as I do today. I was a kid a few years out of college, just trying to eke out a living building software; and when I found a mistake in an algorithm in his book, I intended to write a note in a short e-mail to Dr. Knuth, but I found out he was (wisely) avoiding the medium, so I sent him a letter. And a few months later, I got back a check.

It’s sat in a frame on my desk for twenty years, singularly my proudest professional accomplishment. I’ve shipped tons of software, I’ve made and discovered amazing things, I’ve won other awards — but nothing holds a candle to having a Knuth Check with your name on it.

(More accurately, the original is in my safe-deposit box at the bank, and a copy has sat in a frame for twenty years, because I’m not leaving something this valuable out on my desk! 😃)

So here’s the story:

The Art of Computer Programming, Volume 1, has an algorithm on page 26 that describes an efficient solution for calculating a fractional logarithm in binary, using pure integer math. The algorithm in question derives from one first published in 1624 by Henry Briggs. At the bottom of page 26 in the 3rd Edition, the book mentions that Richard Feynman (yes, that Feynman) determined an efficient inverse algorithm for calculating a fractional exponent in binary, and it proposes to the reader to solve it as problem #28.

As is typical of all of Knuth’s “problems for the reader,” he includes the answer in the rather large appendix that comprises the last 150 pages of the book. The solution to problem #28 can be found at the bottom of page 470, and, unfortunately, it is not the same algorithm that Feynman discovered. It has a critical error in the first step:

E1. [Initialize.] If 1 – ϵ is the largest possible value of x, set y ← (nearest approximation to b^(1 – ϵ)), x ← 1 – ϵ, k ← 1. (The quantity y·b^(–x) will remain approximately constant in the following steps.)

TAoCP, V1, 3rd ed., p. 470

That has a rather unfortunate error in it: x ← 1 – ϵ is potentially a meaningful expression in that context, but it’s wrong, and it will calculate not the correct exponent but garbage data, with, as I recall, mostly zero bits and a few semi-randomly-distributed one bits.

The correct expression is x ← 1 – x. It’s possible the other form was a typographical error on Knuth’s part, but it’s not in the same category as a simple omission or spelling error, since if you follow the mathematics as written, you will simply get garbage, and not get a valid fractional exponent.
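A rough way to see the difference, assuming the later steps shrink x to 0 while holding y·b^(–x) fixed, as the parenthetical in E1 says. Here x₀ is my own notation for the input value, not Knuth's:

$$
\text{as printed:}\quad y\,b^{-x} = b^{1-\epsilon}\,b^{-(1-\epsilon)} = 1
\;\Longrightarrow\; y \to 1 \ \text{regardless of } x_0
$$

$$
\text{corrected:}\quad y\,b^{-x} = b^{1-\epsilon}\,b^{-(1-x_0)} = b^{x_0-\epsilon}
\;\Longrightarrow\; y \to b^{x_0-\epsilon} \approx b^{x_0}
$$

In the printed form the input never enters the calculation at all, so whatever comes out cannot be the exponent you asked for.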

(I struggled for a few days with the original: It was Knuth; surely I’d been the one to make the mistake in my code, not the good Doctor in his! But ultimately when I began to experiment with the broken algorithm, the answer became obvious.)

So I wrote Knuth a letter in the spring of 2001 saying that I thought the step was wrong as published, since it generated garbage output, and that I thought x ← 1 – x was actually the correct form, since it appeared to produce correct results. And he sent me back a rather nice letter and a check for $2.56 for having found an error.

(I had also suggested that the inherent parallel execution implied by the commas in step E2 appeared to be wrong, that sequential execution appeared to be needed there; Knuth chastised me in the letter that no, parallel execution was in fact intended. But I still got an error check for a broken algorithm!)

Interestingly, I didn’t get my check by just searching for errors, like many people have: I was trying to build something; I encountered that error merely by remembering that the books contained an answer to the problem I was facing. But it seems that not many people build things by literally reading the textbook and doing exactly what it says; and apparently, nobody had implemented Feynman’s exponentiation algorithm from the book after it appeared in the 3rd Edition in 1997. [ed. note: I checked, and Knuth added it to the 3rd Ed. in April 1995; my error can be seen in his addenda.]

That said, an entertaining question to posit: Why the heck was I trying to implement fractional logarithms and exponents in the first place?

The answer was that it was 2001, and Windows 98 and ’486 PCs were still pretty common, and you couldn’t assume a computer supported floating-point math. And I needed to calculate powers: As simple as x^y, just like on your calculator, and it was even constrained: In my case, x was always an integer, and y was always a number between 1 and 2. But I didn’t have guaranteed floating-point hardware available, so I couldn’t just write pow(x, y). So I improvised: I used the Briggs and Feynman methods to calculate the logarithm and the exponent in pure integer math, so that I could implement calculation of a power as exp(y * log x) instead, and effectively use multiplication (which the hardware supported) to produce a power (which it definitely didn’t).
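For the curious, here is a minimal Python sketch of that idea. It is not the SpaceMonger code and not a transcription of Knuth's numbered steps: the names (`FRAC`, `ONE`, `T`, `log2_frac`, `exp2_frac`, `int_pow`), the choice of base 2, and the 30-bit fixed-point format are all assumptions made for illustration. The constant table is built with floating-point math here only for brevity; integer-only code would ship it as hard-coded constants.

```python
import math  # used only to build the constant table below

FRAC = 30         # fractional bits in the fixed-point format (an assumption)
ONE = 1 << FRAC   # fixed-point representation of 1.0

# T[k] = log2(2^k / (2^k - 1)) = -log2(1 - 2^-k), scaled to fixed point.
# Real integer-only code would hard-code these values.
T = [0] + [round(-math.log1p(-2.0 ** -k) / math.log(2) * ONE)
           for k in range(1, FRAC + 1)]


def log2_frac(m):
    """log2 of a fixed-point m in [1, 2), using shifts, adds and compares."""
    y = 0
    for k in range(1, FRAC + 1):
        # Multiply m by (1 - 2^-k) for as long as it stays at or above 1.0,
        # adding the matching table entry to the running logarithm.
        while m - (m >> k) >= ONE:
            m -= m >> k
            y += T[k]
    return y


def exp2_frac(f):
    """2**f for a fixed-point f in [0, 1), as the inverse of log2_frac."""
    r = ONE - f      # the corrected first step: x <- 1 - x
    y = 2 * ONE      # start from 2^1; y * 2^(-r) stays roughly equal to 2^f
    for k in range(1, FRAC + 1):
        # Peel table entries off r, scaling y by (1 - 2^-k) each time.
        while r >= T[k]:
            r -= T[k]
            y -= y >> k
    return y


def int_pow(x, y_fixed):
    """Approximate x**y for an integer x >= 1 and a fixed-point y."""
    e = x.bit_length() - 1                  # integer part of log2(x)
    m = (x << FRAC) >> e                    # x / 2^e, a fixed-point value in [1, 2)
    log2_x = (e << FRAC) + log2_frac(m)     # log2(x) in fixed point
    p = (y_fixed * log2_x) >> FRAC          # y * log2(x): the one real multiplication
    whole, frac = p >> FRAC, p & (ONE - 1)  # integer and fractional parts of p
    # 2^whole * 2^frac, rounded to the nearest integer.
    return ((exp2_frac(frac) << whole) + (ONE >> 1)) >> FRAC
```

Calling `int_pow(1000, 3 * ONE // 2)` approximates 1000 raised to the power 1.5. Everything after the table is built uses only integer shifts, additions, subtractions, comparisons, and a single multiplication.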

Today, of course, I’d write pow(x, y), just like you would. But when you’re stuck on super-limited hardware, you have to be a bit more clever.

(For what it’s worth, you can see the code live, in action, if you have a copy of SpaceMonger 2 or 3; just go into the Settings, and turn up the “Exaggeration” slider: Every file’s size will be raised to the power you choose, using exactly this math!)

Feynman, of course, derived that algorithm for exactly the same reason I needed it: They were using mechanical adding machines during WWII as part of the Manhattan Project, and those machines didn’t do powers. But the relativistic math for the atomic bomb needed lots of powers; so he derived the exponent algorithm as the inverse of the logarithm algorithm, so that they were able to just use addition, subtraction, and multiplication, which the adding machines could do, to still efficiently calculate powers, which the machines couldn’t.

So that’s my Knuth Check story. It’s unlikely I’ll ever earn another; I certainly haven’t in the last twenty years! I still haven’t even finished reading Volume 4A yet, and I’m far too busy to randomly pore over the other tomes again just in search of a mistake. But I still managed to earn a prize that’s among the most coveted in this field, and twenty years later, I’m still happy to have done it even once.